Leaders who mislead: A tale of two movies

Over the holidays I found watching Star Wars: The Force Awakens in IMAX 3-D quite stirring. I thought how wonderful it must be to create one's own universe, as George Lucas and J.J. Abrams have done. You set the stage, you choose the players, you determine the story arc, you decide how good and evil do battle, you pick the winners and losers.

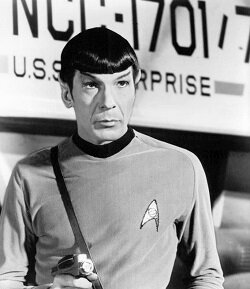

My ideal world would be less stunning than that of Star Wars; it would be more like the logic-based, rational civilization that produced Star Trek's Mr. Spock. In my ideal world, inaction or wrong action would stem only from lack of knowledge or misunderstanding. In my ideal world, the host of mental flaws, traps, errors and fallacies I have been documenting, which lead us to make bad decisions and get undesirable results, would not exist.

Another excellent movie I just viewed, The Big Short, is much closer to reality, and it shows a world even further from my ideal. In my ideal world, deceit would not exist. Yet The Big Short paints a vivid picture of a rigged system marked by greed, naked self-interest, complicity and power. The slice of life that Michael Lewis so adeptly reveals is but a snapshot of what we observe daily: irrefutable evidence that others are constantly trying to manipulate us, pull the wool over our eyes and get their own way, even when that is not in our best interest.

This problem is starkly evident in the current U.S. presidential election cycle, where an avalanche of words and positions is being issued to win our support and diminish other candidates (Donald Trump's pronouncements being the most evident example). Yet the evidence, objectively assessed, often shows the world and our options to be very different from what is being painted.

Setting politics aside, consider how everyday leaders with bad or misplaced intentions lead us, and the business and civic organizations we care about, astray.

A first step to improving how we make the big decisions necessary to get better outcomes in our organizations and our lives is to recognize how we are being played, kept in the dark and misinformed. Here's a list of some ways leaders use mental flaws, traps and fallacies to mislead us. (If you are a leader and see elements of your own behavior in this list, please change now!)

Playing to our predispositions.

Leaders with ill intent play on our worst instincts and on what we want to hear. Leaders who mislead:

Set the bar too low or too high in terms of expectations, thereby predisposing us to make warped judgments based on those expectations. They use the anchoring effect, our tendency to rely too heavily on the first piece of information we are given, to fixate us on a value or number against which everything else is then compared.

Play on our natural inclination to see outside criticism, or information that runs counter to what we already believe, as biased. They amplify the hostile media effect, our tendency to perceive a media report as biased, especially when it conflicts with our own partisan views.

Use adversarial thinking to sweep away otherwise attractive ideas and proposals. By highlighting that the idea or proposal came from an adversary, they invite us to practice reactive devaluation, to diminish or reject proposals only because they purportedly originated with an adversary.

Focus us on threats at the expense of opportunities. Our ingrained instinct to avoid losses, known as loss aversion, causes us to weigh potential losses more heavily than comparable gains, which works against the pursuit of needed change and innovation.

Know that liking leads to persuasion. They cultivate relationships and introduce attractive agents, recognizing that liking predisposes us to be influenced and persuaded by the leader or the agent.

Establishing false premises.

Leaders can deceive us by establishing false constructs. Leaders who mislead:

Draw us to their desired conclusion by selectively presenting evidence or choices. They misuse reframing, a technique that applies to any situation in which a different point of view or behavior can be elicited by restructuring the way information is presented.

Set up false choices to make the options they favor look more inviting. They exploit the decoy effect: decoys are choices deliberately presented not to be chosen, included only to make the other choices look more attractive by comparison.

Use scant evidence to claim they are right. Biased generalizing is the use of an insufficient or biased sample to make an overly strong argument or draw an unsupported conclusion.

Narrow the options to exclude valid choices. They create a false dilemma by unfairly presenting too few choices and implying that a choice must be made among this short menu.

Ignore, and do not bring to our attention, what does not support their view. In logic, this is called destroying the exception, or the fallacy of accident: an argument that applies a generalization while ignoring an exception to the rule of thumb.

Use the fallacy of traditional wisdom to continue practices that once may have been wise but for which the premises have now changed. Kodak's continued investment in film photography in the face of the digital revolution can in part be attributed to leaders who clung to the traditional wisdom despite changed circumstances.

Psychological manipulation.

Leaders can inappropriately influence our views and decisions by triggering and reinforcing psychological effects. Leaders who mislead:

Create dramatic and emotional descriptions to influence our thinking. They employ vivid representations of potential outcomes to divert us from straightforward, abstract information that may have greater value as evidence, and to reduce our sensitivity to the probability that something will or will not happen. They use color, movement and other dynamic stimuli to trigger our salience biases, to distract or engage our attention and to affect our judgment. They provide bizarre or extreme cases and examples (the exception), which we naturally remember better than common, normal and regular ones (the rule). Does it surprise you to learn that the homicide rate in the U.S. is at a 100-year low? News stories and political speeches certainly don't promote this understanding!

Use repetition to make something seem true, even when it is not, and to make it liked simply because it has become familiar. They play on the illusion of truth effect, in which people are more likely to accept statements they have heard before as true, whether or not those statements actually are. Leaders who mislead understand the power of the availability cascade, the self-reinforcing process in which a collective belief gains more and more plausibility through increasing repetition in conversation. They take advantage of the mere exposure effect, our tendency to express undue liking for something merely because we are familiar with it.

Promote effects that shut down effective group discourse and decision making. Leaders can feed groupthink, encouraging the group to latch on to seeming winners and favorites. They can promote their solutions as the group view to trigger the bandwagon effect, reinforcing our natural tendency to go with the flow. They often play into the false consensus effect, enticing us to see more consensus for our beliefs than actually exists. This leads us to ignore the ambiguity inherent in the kinds of issues that come up or the questions we are asked, and to overlook the need to make allowances for judgments different from ours, since others may be seeing or defining things differently. Even when the group picks the leader, as when a jury elects a foreperson, the leader, whether intentionally or not, more often than not ends up with the decision he or she favored before deliberations. "This person given the title of foreperson is likely to speak two to three times more than the average of the remaining jurors, and the verdict is most likely going to reflect what this person thought before deliberations," according to research by Traci Feller from the University of Washington.

Build up the group at the expense of others. They tap into ingroup bias, a product of our tribal heritage, which pushes us to build strong bonds with those in our ingroup and to discount and even disdain others, and thereby to overestimate the abilities and value of our group relative to others.

Self-delusion.

Leaders' lack of self-knowledge, and their biases, can lead others and organizations astray. Leaders who mislead:

See themselves as competent and qualified to lead when they are not, exhibiting illusory superiority or the Dunning-Kruger effect, in which incompetent people fail to realize they are incompetent because they lack the skill to distinguish between competence and incompetence.

May indeed be competent but, unable to assess themselves accurately, falsely assume that others are equally competent when they are not.

Operate from a position of power and control that promotes overconfidence, overestimation of what they know and excessive risk-taking: the path to bad decisions.

Practice self-deception to avoid painful realities. Like the rest of us, they are prone to overestimate the control they have over outcomes and to be unrealistically optimistic.

Exhibit the self-serving bias by evaluating ambiguous information in a way that benefits their own interests more than the organization's. An executive who is compensated based on sales, not profitability, will tend to strive for higher sales at the expense of profits.

Are influenced by observer effects, seeing what they expect or want to see in a situation or outcome even when reality differs from their expectations, and by confirmation bias, subconsciously dismissing anything that threatens their world view and surrounding themselves with people and information that confirm what they already think. This is why, when a whistleblower introduces facts the leader does not expect, does not want to hear or finds threatening, those facts are often discounted along with the messenger.

Posing as experts or as knowing more than we do.

Whether by intent or through ignorance, leaders use their position and podium to misdirect us. Leaders who mislead:

Take on the trappings of authority (expensive clothes, a big office, lavish meals and so on) to trigger compliance with their requests. The famous Milgram obedience studies showed how powerful such "appeals to authority" can be as a method of persuasion.

Lead through claimed expertise when in fact their past success was due to luck or unique circumstances. This is the so-called "expert problem": outside the realm of hard science and physical processes, experts cannot really say much more about the future than the rest of us can.

What to do?

Anyone who has followed my posts knows that I am on a quest. The essential link between strategic thinking and strategic action - beyond the quality of the thinking and decisiveness of the action - is decision making. I am on a quest to understand why we make bad decisions and what we can do to make better decisions when it really counts.

Alas, not being Mr. Spock or having The Force at our disposal, we are highly vulnerable to manipulation and too easily misdirected by leaders who mislead.

Yet, in our very human way, we can be Spock-like informed skeptics who seek more evidence to either support or disconfirm the leader's direction. We can learn about, look for and work to counter or mitigate the many psychological and social factors that contribute to misperception of the world around us and bad decision making.