“The instant of decision is madness”

Note: This post on undecidability and yet having to make a leap of faith and decide is part of a chapter, "The madness of not knowing," in my upcoming book, Big Decisions: Why we make decisions that matter so poorly. How we can make them better.

Twenty years ago, in a survey of 100 prominent physicists, Scottish physicist James Clerk Maxwell was voted the third greatest physicist of all time, behind only Sir Isaac Newton and Albert Einstein. In the mid-19th century, Maxwell produced the classical theory of electromagnetic radiation, bringing together electricity, magnetism, and light as different manifestations of the same phenomenon. He also developed a statistical means of describing the kinetic theory of gases, produced the first durable color photograph, and devised methods for analyzing the rigidity of trusses such as those used in bridges.

In Maxwell’s thinking, what we don’t know drives scientific discovery: “Thoroughly conscious ignorance is the prelude to every real advance in science,” he observed.1

Stuart Firestein, former Chair of Columbia University's Department of Biological Sciences, amplifies Maxwell’s point in Ignorance: How It Drives Science. He writes about “knowledgeable ignorance, perceptive ignorance, insightful ignorance” as a more positive condition of knowledge, what he says is “the absence of fact, understanding, insight or clarity about something.” He notes that this communal lack of knowledge and the questions it generates is what drives science.2

'Unknown unknowns'

However, we humans often fail to use the knowledge that does exist and is accessible to us when we make decisions. Knowing something leads us to think we know enough, even if what we know is dramatically insufficient.

Former U.S. Secretary of Defense Donald Rumsfeld famously said, “Reports that say that something hasn't happened are always interesting to me, because as we know, there are known knowns; there are things we know we know. We also know there are known unknowns; that is to say we know there are some things we do not know. But there are also unknown unknowns – the ones we don't know we don't know. And if one looks throughout the history of our country and other free countries, it is the latter category that tend to be the difficult ones.” 3

Philosopher Arthur Schopenhauer said of the “unknown unknowns,” "Everyone takes the limits of his own vision for the limits of the world." And Maxwell’s contemporary, physicist Michael Faraday, coined the phrase “ignorant of its ignorance” to describe society in general.

What Rumsfeld’s construct does not include is the thought that there are things that we think we know that are not true. Call these the things “we think we know but we don’t know.”

Overconfident in what we think we know

Imagine your reaction to a deadly plague that would ultimately kill half the citizens of your city. You would try to seek safety if you weren’t already quarantined, with the city gates locked to keep you inside. Even if you were to exit the city, that might not save you, as the plague was also rampant in surrounding districts and devastating cities farther off. You might avoid contact with others. You might seek potions and remedies to ward off this unexplained source of death. And you might look for someone to blame.

Psychologists Piergiorgio Battistelli and Alessandra Farneti of the University of Bologna recount a story of blame from the Great Milan Plague of 1630. According to historical accounts built on by 19th-century Italian novelist Alessandro Manzoni in his famous book I Promessi Sposi (“The Betrothed”), some Milanese citizens assumed that the epidemic had been caused by the so-called "greasers," who allegedly greased walls and doors around the city with a deadly substance. Battistelli and Farneti explain, “Renzo, the main character of the novel, is mistaken for a greaser” by a passer-by “because of some of his actions.” Renzo is denounced and chased by a crowd. They continue, “Manzoni then tells us that in later years people started to contest this myth of the greasers,” yet the person who had denounced Renzo argued that “one must have seen things.” Manzoni made it clear that “in the mind of that alas nameless person there wasn't even the slightest doubt about his representations.” 4

Obviously, with 21st century insight we know that the plague was transmitted by infected fleas borne by rats, not by people imagined to have greased buildings with a toxic substance. Yet, we still are plagued by minds filled with things imagined, that we “must have seen.”

In fact, extensive research shows that we exhibit excessive confidence that we know the truth and “cling too fervently to beliefs that are poorly supported by evidence, adjusting [our] beliefs too little in light of the evidence or the consequences of being wrong.” This type of overconfidence is called “overprecision,” defined by Daniel Kahneman as “our excessive confidence in what we believe we know, and our apparent inability to acknowledge the full extent of our ignorance and the uncertainty of the world we live in.” 5

Impossible knowledge

Here’s 21st century evidence of the pernicious nature of our overprecision. An article from Stav Atir and David Dunning of Cornell University and Emily Rosenzweig of Tulane University published in Psychological Science in 2015 showed that having a high perception of one’s own expertise led people to claim they had impossible knowledge. For example: Participants who rated themselves highly in financial expertise claimed that they knew about fake financial terminology. Those who rated their knowledge high in an academic area (such as biology) claimed more knowledge in nonsense concepts (e.g. meta-toxins, biosexual, retroplex). Those made to feel that their knowledge of geography was high were more likely to claim they had heard of made-up American cities (Monroe, Montana; Lake Othello, Wisconsin; Cashmere, Oregon). 6

“Epistemic arrogance” is a term coined by Nassim Nicholas Taleb. In Fooled by Randomness, he explains, “Measure the difference between what someone actually knows and how much he thinks he knows. An excess will imply arrogance, a deficit humility.” 7

Epistemic arrogance compounds our difficulty in making great decisions. As we learn more, we tend to gain even more confidence in our knowledge and underestimate uncertainty. Taleb says that rather than opening an awareness that we know so little, learning can have the opposite effect. “Our increase in knowledge [is] at the same time an increase in confusion, ignorance and conceit." We "compress the range of possible uncertain states (i.e., by reducing the space of the unknown)."

Psychologist David Dunning explains it bluntly: “An ignorant mind is precisely not a spotless, empty vessel.” It is filled with information, all the “life experiences, theories, facts, intuitions, strategies, algorithms, heuristics, metaphors, and hunches” that our brain indiscriminately uses to plaster over the intellectual blind spot. 8

We must decide based on ‘unknowledge’

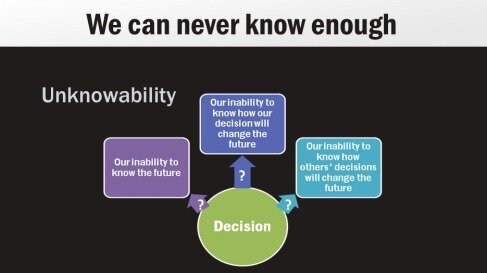

Wanting to make a rational decision presents us with an unbridgeable dilemma. We must decide, but a rational decision is impossible. We can never know enough. We hope that we are not arbitrary in our decision, but we must act when we are uncertain and our action is always contingent on more knowledge showing us a better decision.

G. L. S. Shackle, a 20th-century English economist, noted, "We can choose only among imaginations and fictions. Imagined actions and policies can have only imagined consequences, and it follows that we can choose only an action whose consequences we cannot directly know, since we cannot be eyewitness of them." Thus, Shackle used the term “unknowledge,” the absence of knowledge that, if we had it, would be potentially relevant to our decision making and therefore makes our decision making uncertain. Because of "unknowledge," Shackle described making a decision as “the focal, creative, psychic event where knowledge, thought, feeling and imagination are fused into action.” 9

'The instant of decision is madness'

Jacques Derrida, an Algerian-born twentieth-century philosopher, is known for asserting that because of "undecidability," “Decision making is a leap of faith.”

His point is that if it were not, if everything were known to inform the decision, then it would not be a decision at all, it would just be applying rationality and calculation to give the answer. He wrote that a “decision that didn’t go through the ordeal of the undecidable would not be a free decision, it would only be the application or unfolding of a calculable process.” Thus, Derrida quotes Kierkegaard, “The instant of decision is madness.” 10

Derrida believed that a decision requires a “leap of faith” beyond the sum total of the facts, according to Jack Reynolds of La Trobe University. Derrida wrote that "not only must the person taking the decision not know everything…the decision, if there is to be one, must advance towards a future which is not known, which cannot be anticipated." He explained, “A person who knows everything does not make decisions. Decisions involve the person being moved, temporally, into the ‘unknowability of the future.'" 11

Reynolds summarizes Derrida’s “philosophy of hesitation” resulting from decisions being a leap of faith. “If a decision is an example of a concept that is simultaneously impossible within its own internal logic and yet nevertheless necessary,” then it supports our “reticence to decide…deferring, with all of the procrastination that this term implies.”

Decisions change the future in ways we cannot foresee

The future is dynamic and our decisions will change it. When we act on a decision, others react, and yet others react to their actions. We cannot anticipate all the actions and changes that our act generates. And when others act based on our reactions, we react, causing further actions by others.

This action-reaction change dynamic in decision making was labeled "double contingency" by sociologist Talcott Parsons. Sociologist Niklas Luhmann explained that double contingency occurs when two systems mutually make their own selections dependent on the decisions of the other system. He observed, "The problem is how to decide which of the two systems will make the initial choice, which can then form the basis of the response of the other system. Since both systems have established their choice as dependent on the choice of the other system, the situation becomes overloaded with indetermination and complexity and might become 'locked up.'" 12

We must act and then see

The important point for decision makers to reckon with is that the world does not hold still while we are trying to establish a preference order among decision alternatives. Because the ground shifts as we decide, we are fooling ourselves if we believe that clear decision options and justifications exist ahead of the actual decision. We must act, then see what comes next, and then make our next decision and take our next action.

"Only when we formulate strategy in the context of execution, when we are acting, will we know why we decided what we did, our preferences, and see the justification for our decision," concludes Andreas Rausche of Copenhagen Business School, “Strategy formation is thinking within (and not prior to) action.” 13

Endnotes

1 The Royal Society, "Science in the Making," James Clerk Maxwell, https://makingscience.royalsociety.org/s/rs/people/fst00040685

2 Ignorance: How It Drives Science, Stuart Firestein, Oxford University Press, 2012, ISBN-13: 978-0199828074 https://www.amazon.com/Ignorance-Drives-Science-Stuart-Firestein/dp/0199828075

3 Defense.gov News Transcript: DoD News Briefing – Secretary Rumsfeld and Gen. Myers, United States Department of Defense (defense.gov). https://archive.defense.gov/Transcripts/Transcript.aspx?TranscriptID=2636

4 Battistelli Piergiorgio and Farneti Alessandra, "When the theory of mind would be very useful," Frontiers in Psychology, Volume 6, 2015, DOI 10.3389/fpsyg.2015.01449. ISSN 1664-1078 https://www.frontiersin.org/article/10.3389/fpsyg.2015.01449

5 Thinking, Fast and Slow, Daniel Kahneman (October 25, 2011). Macmillan. ISBN 978-1-4299-6935-2. https://www.amazon.com/Thinking-Fast-Slow-Daniel-Kahneman/dp/0374533555

6 S. Atir, E. Rosenzweig, D. Dunning. "When Knowledge Knows No Bounds: Self-Perceived Expertise Predicts Claims of Impossible Knowledge." Psychological Science, 2015; DOI: 10.1177/0956797615588195

7 Fooled by Randomness: The Hidden Role of Chance in Life and in the Markets, Nassim Nicholas Taleb, Random House Trade Paperbacks, 2005 ISBN: 1400067936 https://www.amazon.com/Fooled-Randomness-Hidden-Markets-Incerto/dp/0812975219

8 "We Are All Confident Idiots," David Dunning, Pacific Standard, October 27, 2014. https://psmag.com/social-justice/confident-idiots-92793

9 Hans Landstrom and Franz Lohrke, editors, Historical Foundations of Entrepreneurship Research. Cheltenham, UK: Edward Elgar, 2010. ISBN: 978-1-84720-919-1. https://books.google.com/books?isbn=1849806942

10 "Jacques Derrida," Stanford Encyclopedia of Philosophy, July 30, 2019 https://plato.stanford.edu/entries/derrida/

11 "Jacques Derrida," Jack Reynolds, Internet Encyclopedia of Philosophy https://www.iep.utm.edu/derrida/

12 Raf Vanderstraeten, "Parsons, Luhmann and the Theorem of Double Contingency," Journal of Classical Sociology 2(1), 2002.

13 The Paradoxical Foundation of Strategic Management, Andreas Rasche, Springer Science & Business Media, Oct 25, 2007.